Open Learner Modelling as the Keystone of the Next Generation of Adaptive Learning Environments

R. Morales, N. Van Labeke, P. Brna, M. Chan

Abstract

Learner models, understood as digital representations of learners, have been at the core of intelligent tutoring systems since their inception. Learner models provide the knowledge about the learner that is necessary for any personalisation through adaptation, and most intelligent tutoring systems have been designed to support the learner modelling process. Learner modelling is a necessary process to achieve the adaptability, personalisation and efficacy of intelligent tutoring systems. This chapter provides an analysis of the migration of open learner modelling technology to common e-learning settings, the implications for modern e-learning systems in terms of adaptations to support the open learner modelling process, and the expected functionality of a new generation of intelligent learning environments. This analysis is grounded in the authors’ recent experience with an e-learning environment called LeActiveMath, the product of a project aimed at developing a state-of-the-art web-based learning environment for Mathematics.

INTRODUCTION

The history of the use of computers for training and education started soon after the introduction of the first commercial computers. For some time, research and development in this area have been under the influence of two main visions: one which sees information and communication technologies as useful tools for improving people's access to learning resources and enhancing their teaching and learning experiences, and another one which sees computers as intelligent agents playing a proactive role in the educational context, much as students, teachers and tutors do. Practitioners strongly influenced by the first view have been mainly concerned with developing systems that can make the ever-evolving information and communication technologies more useful for training and education. In contrast, practitioners strongly influenced by the second view have been mostly interested in enhancing the learning experience by making computers as flexible and supportive of learning as human tutors are capable of being (ADL, 2001; Gibbons & Fairweather, 2000).

Current e-learning systems, such as learning management systems based on content, metadata and web technologies, are widespread implementations of the first approach. They are mostly designed to make information and learning materials easily available to a broader audience, while providing a set of tools for supporting, and hopefully enhancing, human-to-human communication. Their way of supporting learning, however, usually combines two simple models: a rigid, predefined path through educational and informational materials, and free browsing and choice of content. The danger of this approach, of course, is to replicate on a massive scale the traditional and ineffective educational approaches of one-size-fits-all and unsupported consumer freedom. By contrast, Intelligent Tutoring Systems (Polson & Richardson, 1988; Wenger, 1987), as products of the second approach, have always cared for their learners as individuals, and they have used adaptation and personalisation as essential mechanisms for achieving their purpose of promoting better learning by their users (Self, 1999). Nevertheless, intelligent tutoring systems have mostly stayed in their designers' laboratories, due to the difficulty of scaling them to more realistic settings and integrating them with other educational systems (Picard, Kort, & Reilly, 2007).

Learner models, understood as digital representations of learners, have been at the core of intelligent tutoring systems since their inception (Carbonell, 1970). Learner models provide the knowledge about the learner that is necessary for any personalisation through adaptation, and most intelligent tutoring systems have been designed to support the learner modelling process: a win-win strategy that has produced many systems that are successful in improving learning. Learner modelling is a necessary process to achieve the adaptability, personalisation and efficacy of intelligent tutoring systems. Consequently, if we want equivalent functionality in modern e-learning environments, we need to introduce this same process into them and adapt it to their new working conditions (Brooks, Greer, Melis, & Ullrich, 2006; Brooks, Winter, Greer, & McCalla, 2004; Brusilovsky, 2004; Devedzic, 2003). Furthermore, a variation of learner modelling in which the learner plays an active role in the modelling process, known as open learner modelling (Morales, Pain, Bull, & Kay, 1999), sets the context for the system and learners (and even other actors in the learning process, such as teachers) to discuss, through suitable user interfaces, the knowledge, preferences, motivational dispositions and other aspects of the learner as “believed” by the system. Beliefs can be inspected and negotiated (Bull, Brna, & Pain, 1995), leading to a better picture of the learner or, at least, to a learner model that the learner knows and agrees with more. This process promotes learners' reflection on, and awareness of, their own condition, leading to a better-informed learner who can make better decisions on what to do next (Cook & Kay, 1994), and paving the way for the system to make suggestions based on its inspected, justified and negotiated beliefs.

In this chapter we provide an analysis of the migration of open learner modelling technology to common e-learning settings, the implications for modern e-learning systems in terms of adaptations to support the open learner modelling process, and the expected functionality of a new generation of intelligent learning environments. This analysis is grounded in the authors’ recent experience with an e-learning environment called LeActiveMath, the main product of a European-funded research project aimed at developing a state-of-the-art web-based learning environment for Mathematics.

The second section of this chapter sets the context of our work with a description of the general characteristics of the educational model of e-learning, followed by the proposal of a new educational model for next-generation intelligent learning environments with integrated open learner modelling technology. The third section is devoted to a brief presentation of the salient characteristics of the LeActiveMath system as representative of a general class of modern e-learning systems. The fourth section focuses on learner modelling in LeActiveMath. It includes a presentation of the motivations and issues addressed in the project, the inspection/challenge aspects of its open learner modelling, and its scope and limitations. In the fifth section we explore the generalisation of the open learner modelling approach followed in LeActiveMath to the broader class of systems LeActiveMath represents. The sixth section contains a brief report of recent approaches to learner modelling and evaluation results. The chapter ends by drawing some conclusions on the state of the art and sketching future research directions.

THE E-LEARNING EDUCATIONAL MODEL

There are at least five educational traditions that converge into common e-learning systems nowadays: expositive teaching, programmed instruction, distance and continuous education, administration and multimedia-based didactics.

Expositive teaching is certainly the most entrenched tradition in education. It gives the teacher the task of presenting information and carrying out demonstrations to a learner whose function is reduced to capturing the information and transcribing it, only to give it back through exercises and assessment. In e-learning this means that educational materials have an expositive nature, with a minimal level of interaction, and the e-learning environment is an information container. Criticism of expositive teaching is widespread. Other approaches, such as discovery learning, the constructivist models and the perspective of educational communication, have been developed mostly in opposition to frontal, expositive teaching. Influential authors who have led movements against expositive teaching include, among others, Ausubel (1995), with meaningful and discovery learning, and Freire (1999), with his notion of “banking education” as an analogy for the informational deposit in expositive teaching.

Programmed instruction is an educational method based on educational materials with detailed step-by-step instructions for learner actions. Questions are also provided to assess learner progress in acquiring the information from the materials. Regarding instructional design, it is the teacher who sets the materials and their sequencing, defines assignments, evaluates and generally dictates to the learner what to do. The e-learning environment acts as a space for delivering instruction and assessing information transfer. Programmed instruction was the first use of computers for teaching in the 1970s, and it is the antecedent of closed instructional design based on structured content and predefined closed questions and answers (Gibbons & Fairweather, 2000).

E-learning has also been developed using instructional models from traditional distance education, based on detailed descriptions of the sequence of activities to be carried out by the learner and carefully designed characterisations of their products, under the assumption that there would be little support from teachers for the learning process, given that communication between learners and teachers in traditional distance education was mostly by post. E-learning that has evolved from traditional distance education settings towards the use of learning management systems (LMS) has maintained its pedagogical emphasis on carefully laid-out directions for activities to be carried out outside the learning management system, regarding the latter only as a medium to deliver instruction, not as an environment for teaching and learning (Bates, 2005).

From the management view of education, e-learning imports its emphasis on organisational tools and spaces, such as agendas, programmes, content repositories and drop boxes for assignments and feedback (Chan Núñez, 2004). Information and communication technologies become the new raw materials for building organisational tools and very little else. On the other hand, among the more significant didactical applications of new technologies, one can observe a focus on the rich expressiveness of the new media as a tool for delivering information through all of the learner's senses. Learners are seen as free explorers of educational materials in the context provided by the organisational tools.

Together, the five traditions outlined above shape the conception of e-learning environments as containers of educational materials to be browsed, or of detailed instructions to be executed along predefined paths, by a preconceived hypothetical learner.

Interactivity and Self-management

There are two concepts whose meaning, as qualities of learning in virtual environments, has been reduced: interactivity and self-management. The execution of tasks by the learner, following the predefined path set in a learning management system, has been understood as self-managed because the learner makes decisions, limited to the amount of time assigned to each task, and behaves in a more or less disciplined way along the course. The learner decides on these behaviours, but not on trajectories and contents. In this approach, the concept of self-management is actually reduced to the development of study habits, the discipline to fulfil tasks, and the responsibility to undertake each activity indicated. Self-management is therefore exercised within a frame of provisions, decided by educators, which do not necessarily respond to the interests, needs or capacities of the learner. So, what is it that the learner really manages?

Another term that is applied indiscriminately in e-learning is interactivity. From a computational and informational perspective, a system exhibits interactivity if it allows information to flow in both directions between it and its user. However, from the perspective of meaning, we should distinguish between interactivity and interaction, the latter requiring not only information exchange but also mutual influence between the subjects in the communication process. In courses whose design guides the actions of the learner, we can observe reactions of the learner as responses to predefined and anticipated situations, but their actions have no effect on the planning of the course.

In e-learning we have come to regard the systematic design of courses as a precious quality, but e-learning then ends up as a collection of closed systems. There is little space or time for learners' perception of their own learning, or for recognition of what they have achieved, let alone of their needs and interests.

Towards a New Educational Model for e-learning

In contrast to the “traditional” e-learning model depicted above, we would like to propose a new model based on the principles of self-management, creativity, signification and participation (UDG Virtual, 2004). This implies at least three things: (1) all four principles should be identifiable as characteristics of learners in e-learning environments, especially after some time of exposure to the model, (2) the design of the environment should be guided by them, and (3) teachers/tutors should undertake their tasks with the same principles in mind.

Learners’ self-management is understood here as their achievement of security and self-confidence, as their capacity to make decisions and drive their own learning process, and as their commitment towards their own being and the tasks that fall under their responsibility. Creativity is understood as the capacity of a person to identify problems, to generate alternative ideas about them, to find alternative solutions, to express themselves and to innovate. Signification is linked to creativity insofar as it involves engagement with problems and their solutions, grounding of concepts in experience, and the capacity to generalise acquired knowledge and transfer it to new situations. Finally, participation is understood here as cooperation, collaboration and teamwork.

The principles of self-management, creativity, signification and participation are supported mainly by two theoretical approaches to learning: cognitivism and social constructivism (Ausubel, 1995; Vygotsky, 1996). These positions coincide with premises about competency-based learning (Gonczi & Athanasou, 1996), among others: learning by doing, learning by getting involved in tasks with a meaning for the (social) learner, role playing in a team carrying out collective tasks and project-based learning (Reigeluth, 1999).

We believe that open learner modelling can play a critical role in developing self-management, signification, participation and even creativity in learners, and that its place is at the centre of the e-learning environment, as its main access and meeting point. Through it, learners would be able to inspect their learning process and their achievements, to engage with them by challenging and negotiating the system's view of them (in a general sense, including the views of others such as teachers and peer learners), and to gain security and self-confidence by visualising and reflecting on their progress. Through open learner modelling, learners would get support from the environment for their learning in a justified manner (e.g. ‘you can see I believe, for good reasons, that you are very close to mastery of this competency, so I recommend that you practise it a bit longer and then reflect on what you have done and achieved’), so they can make informed decisions about their learning process.

Open learner modelling needs to evolve in order to meet this challenge, and our work on the LeActiveMath project can be seen as a move in this direction.

THE LEACTIVEMATH PROJECT AND SYSTEM

The LeActiveMath project was born from a desire to improve the support for learning mathematics provided by ActiveMath, a ‘generic and adaptive web-based learning environment’ (Melis et al., 2001) which used a book metaphor to present educational content for learners to choose from on the basis of their profile (e.g. educational level and field of studies), learning goals and scenarios (e.g. learning a topic for the first time, revising it or preparing for an assessment). The system used an XML-based knowledge representation for encoding mathematical documents and exploited it to choose the content that fitted the learner’s profile and request, and to present it in a variety of formats, thus achieving a primitive sense of personalisation. The LeActiveMath project (LeActiveMath Consortium, 2007) aimed to transform ActiveMath into a next-generation intelligent learning environment, in which learners could take the initiative in their active and exploratory learning of Mathematics while the system supported them through a variety of mechanisms for adaptation and personalisation, ranging from intelligent feedback and tutorial dialogue, through content suggestions, to open learner modelling.

The new system, called LeActiveMath, fits the description given by the Advanced Distributed Learning Initiative (ADL, 2004b) of a second-generation e-learning system that combines a modern content-based approach from computer-assisted instruction with adaptive educational strategies from intelligent tutoring systems. This mixture of approaches produced tensions during the design of the system in general, but particularly during the design of its learner modelling subsystem, since this has to support a wide range of adaptive educational strategies, from coarse-grained book construction to tailored natural language dialogue, while generally lacking something traditionally afforded in intelligent tutoring systems: painstakingly designed and dynamically constructed learning activities capable of providing large amounts of detailed information about learner behaviour. A learner model working in these conditions has to deliver more with less. It has to be able to answer questions about the learner on the basis of sparse information without resorting to blind over-generalisation.

Content-based e-learning

As in many other e-learning systems, LeActiveMath makes heavy use of pre-authored educational content to support learning, aiming in this way to capitalise on the efforts and expertise of a variety of authors in producing standardised educational materials. However, a big disadvantage of this approach is that educational content is for the most part opaque to learner modelling, in the absence of a domain expert subsystem that can be queried about what is inside the content. The information available is hence reduced to that explicitly provided by authors in the form of metadata, which is not ideal for reasons explained below. This configuration is common in content-oriented systems such as popular commercial and open source learning management systems, yet it is somewhat paradoxical in the case of LeActiveMath, given that its educational content is encoded in a language designed to represent the semantics of statements (Kohlhase, 2005), which is nevertheless used mostly for providing links to other pieces of content (e.g. reference content) and for supporting multiple presentation formats (like HTML, MathML, PDF and SVG).

Content in e-learning systems tends to come in relatively big chunks with little flexibility, compared to the finer-grained and highly flexible “content” traditionally found in intelligent tutoring systems. An advantage of the former approach is that the system has a small number of big components, instead of a large number of small ones, to put together in a coherent way. As with jigsaw puzzles, the task in the first case is generally easier than in the second. On the other hand, from a learner modelling point of view, most pieces of content can hardly be adapted to suit the dynamic needs of the ongoing modelling task, while information about learner behaviour and performance tends to come, if at all, in big summary chunks at the end of the learner's interaction with each piece of content.

Metadata

As mentioned above, the absence of a domain expert inside an e-learning system such as LeActiveMath forces a learner modelling component to work on the basis of content metadata. There are at least three problems with this way of proceeding that need to be addressed. First, metadata is a heavy burden on authors since it amounts to doing the work twice: saying the thing and saying what has been said. The more detailed and accurate the metadata, the more extra work has to be done. Automatic production or verification of metadata would be very helpful, but it amounts to introducing some expensive domain expertise into the system or authoring tool.

Secondly, metadata tends to be subjective. Although there can be a lot of commonality in two experts' views of their subject domain, there are also differences, which are sometimes serious. Hence two authors could easily provide different metadata for equivalent pieces of content. The more flexible the addition of new content and metadata to the system, the greater the diversity in the criteria for defining metadata. A learner modelling component in these conditions must be tolerant of diversity.

Thirdly, metadata lacks detail. A book's record in a library catalogue is never the same as the book itself, and this applies to metadata of electronic content as well. What to include as metadata is decided beforehand, whilst filling gaps later can be very expensive. A well-intentioned drive towards standardisation of metadata is thwarted (from a modelling point of view) by the shallowness of current metadata standards such as LOM (IEEE, 2002).

Navigation Freedom

Guidance to learners through educational content in LeActiveMath, as in many other e-learning systems, swings between two extremes: predefined paths and content browsing. LeActiveMath contains a handful of predefined “books” on different areas of mathematics to be followed by learners, and learners can define new books according to their own goals. Once a book is defined, it can change only through the addition of new content recommended by the system from time to time. The learner has two choices: either to follow the books in the recommended order or to browse their content at will, with no further guidance other than a table of contents with indications of progress.

From a learner modelling perspective, both situations are for the most part equivalent, since neither of them adapts the presentation of new content to the modelling needs. Whereas in intelligent tutoring systems learner modelling can be used to lead the learner's progress through the subject domain, in e-learning it has to be opportunistic: taking advantage of whatever information is available at any time.

THE EXTENDED LEARNER MODEL

During the LeActiveMath project we developed a learner modelling engine to support the new adaptive features of the LeActiveMath system, as well as to explore the possibilities of open learner modelling in this new context. We called it the Extended Learner Model (xLM) for reasons that will become apparent later. It was designed to deal with and benefit from the features of its host system but, nevertheless, it was expected to be easily detachable from it to serve similar e-learning systems, either as an embedded component or by offering its services on the Web.

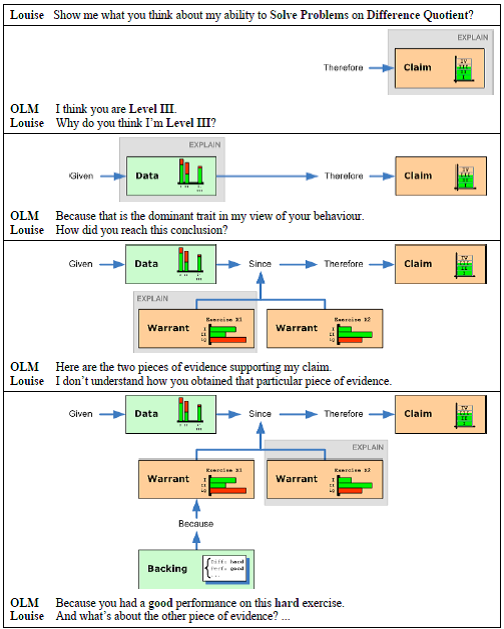

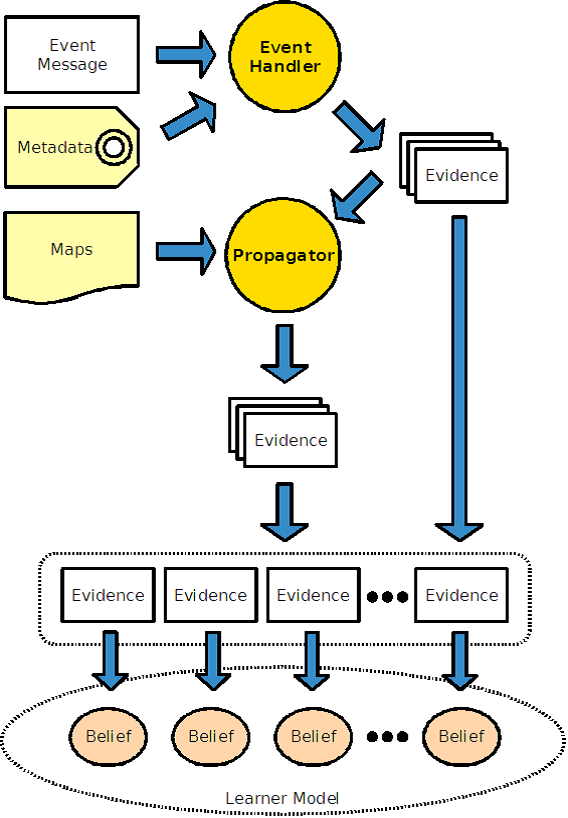

Figure 1

The process by which content is transformed into information about learners to feed learner models in xLM. Thick arrows represent information flow whereas thin arrows represent relationships between elements.

Figure 1 illustrates the process by which xLM gets information concerning learner interaction with educational content. In essence, content encoded in LeActiveMath’s mathematical content representation language OMDoc (Kohlhase, 2006) is transformed into a presentation language (HTML, MathML or PDF) using style-sheets and related technologies. Some of the content items and their presentations allow learners to interact with them in such a way that the interaction can be captured by LeActiveMath and reported to xLM in the form of event messages containing data such as the learner identifier, the content item identifier, the type of event reported and additional information such as (for some events) a measure of learner performance.
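As a rough illustration of the event messages just described, the following Python sketch models one message as an immutable record. The field names, the event type string "ExerciseFinished" and the assumption that performance is a success rate in [0, 1] are hypothetical; they are not LeActiveMath's actual message format.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, Optional


@dataclass(frozen=True)
class EventMessage:
    """Hypothetical shape of an event message sent from LeActiveMath to xLM."""
    learner_id: str
    item_id: str                          # content item identifier, e.g. an mbase:// URI
    event_type: str                       # illustrative name, e.g. "ExerciseFinished"
    performance: Optional[float] = None   # assumed success rate in [0, 1]; only some events carry it
    extra: Dict[str, Any] = field(default_factory=dict)

    def __post_init__(self) -> None:
        # Reject malformed performance values as soon as the message is built.
        if self.performance is not None and not 0.0 <= self.performance <= 1.0:
            raise ValueError("performance must lie in [0, 1]")


# Example: the exercise event used later in this chapter (learner id is made up).
msg = EventMessage(
    learner_id="learner-42",
    item_id="mbase://LeAM_calculus/exercisesDerivs/mcq_const_lin_derivs",
    event_type="ExerciseFinished",
    performance=1.0,
)
```

Freezing the record reflects the idea that a reported event is a fact about the past; any interpretation of it happens downstream, in the event handlers.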

A variation of this scheme consists in the introduction of additional components acting as diagnosers of learner behaviour, which evaluate what happens during the interaction of learners with content and produce judgements on learners' states and dispositions. Examples of such additional diagnosers include an assessment tool that produces judgements on learners' levels of competency, a self-report tool through which learners provide judgements on their own affective states, and a situational model that produces judgements on learners' motivational state. A further variation of the scheme consists in learners interacting with their learner models instead of interacting with educational content. The models are made available through an xLM component called the extended Open Learner Model (xOLM), which provides learners with a graphical user interface to their models. It includes facilities for inspecting and challenging beliefs held in the models and the evidence supporting them. xOLM also acts as a diagnoser, producing judgements on learners' levels of metacognition.

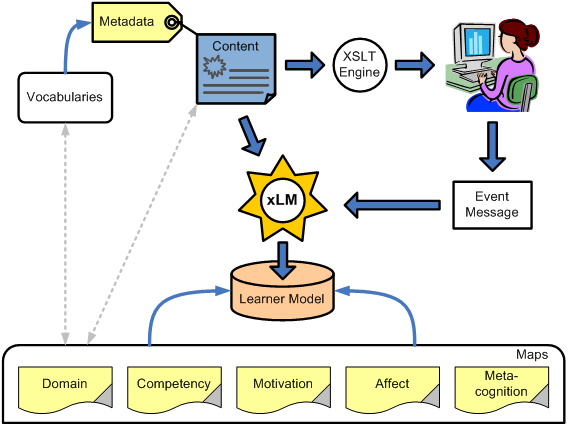

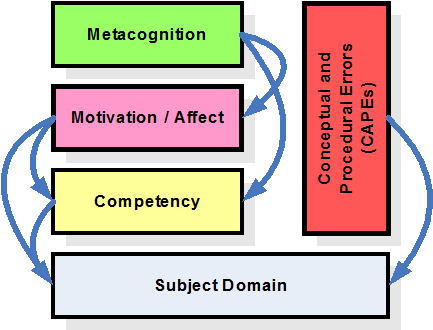

Figure 2

The process of interpreting events for producing evidence for beliefs in a learner model. Arrows represent information flow.

Once xLM receives an event message, it interprets it using the event handler that corresponds to the type of event reported in the message (Figure 2). The event handler uses the identifier of the content item, as reported in the message, to retrieve the item metadata that sets the context for interpreting the rest of the message. In particular, metadata provides information to identify the domain topics and competencies related to the event, while additional data in the event message helps to identify related affective and motivational factors, if any. Armed with all this information, the event handler produces evidence to update a selection of beliefs in a learner model, as identified by their belief descriptor: a tuple of six identifiers, one for each of the learner dimensions modelled by xLM,

〈domain topic, misconception, competency, affective disposition, motivational disposition, metacognition〉.
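The dispatch step and the six-slot descriptor can be sketched as follows. This is a minimal illustration under stated assumptions, not xLM's code: the handler registry, the metadata dictionary and its keys (`topics`, `competency`), the item identifier `ex1` and the event type name are all hypothetical, and `None` stands for an empty slot.

```python
from typing import Callable, Dict, List, NamedTuple, Optional


class BeliefDescriptor(NamedTuple):
    # One slot per learner dimension modelled by xLM; None plays the role of "_" (empty).
    topic: Optional[str] = None
    misconception: Optional[str] = None
    competency: Optional[str] = None
    affect: Optional[str] = None
    motivation: Optional[str] = None
    metacognition: Optional[str] = None


# Hypothetical metadata store keyed by content item identifier.
METADATA: Dict[str, dict] = {
    "ex1": {"topics": ["deriv_pt", "derivative"], "competency": "think"},
}

Handler = Callable[[dict, dict], List[BeliefDescriptor]]
HANDLERS: Dict[str, Handler] = {}


def handles(event_type: str) -> Callable[[Handler], Handler]:
    """Decorator that registers a handler for one event type."""
    def register(fn: Handler) -> Handler:
        HANDLERS[event_type] = fn
        return fn
    return register


@handles("ExerciseFinished")
def exercise_finished(event: dict, meta: dict) -> List[BeliefDescriptor]:
    # One competency belief per domain topic listed in the item metadata.
    return [BeliefDescriptor(topic=t, competency=meta["competency"]) for t in meta["topics"]]


def interpret(event: dict) -> List[BeliefDescriptor]:
    handler = HANDLERS[event["type"]]   # choose the handler by event type
    meta = METADATA[event["item"]]      # item metadata sets the interpretation context
    return handler(event, meta)
```

Calling `interpret({"type": "ExerciseFinished", "item": "ex1"})` yields one descriptor per topic, each with only the topic and competency slots filled.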

Each element in a belief descriptor must either be empty or appear in the concept map that specifies the internal structure of the corresponding dimension in the learner models (see bottom of Figure 1). It is the composition of these maps, in the predefined way illustrated in Figure 3, that governs the composition of belief descriptors and defines the overall structure of learner models in xLM. Propagators use the structure of the maps to spread the evidence produced by event handlers through the network of beliefs, producing in the end a relatively large collection of indirect evidence for a broader selection of beliefs than those directly addressed by the event. The final step in the learner modelling process is updating the beliefs in the learner model in the light of the new evidence accumulated.
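A minimal sketch of propagation, assuming each concept map can be reduced to child-to-parent links and that indirect evidence is weakened by a fixed factor per hop. The map contents (including the `think` to `judge` edge), the `decay` and `min_weight` parameters, and the use of a single scalar weight instead of a full belief function are illustrative assumptions; the text does not specify how xLM's propagators discount evidence.

```python
from collections import deque
from typing import Dict, List, Tuple

Descriptor = Tuple[str, ...]   # six slots, "_" for an empty slot, as in the text

# Hypothetical concept maps: node -> parent nodes, keyed by descriptor slot index.
MAPS: Dict[int, Dict[str, List[str]]] = {
    0: {"derivative": ["differentiation"], "deriv_pt": ["derivative"]},  # domain map
    2: {"think": ["judge"]},                                            # competency map (illustrative edge)
}


def propagate(start: Descriptor, weight: float, decay: float = 0.5,
              min_weight: float = 0.1) -> Dict[Descriptor, float]:
    """Spread direct evidence of `weight` to related beliefs, breadth first.

    Each hop along a map edge multiplies the weight by `decay`; a belief is
    revisited only if it can be reached with a larger weight than before, and
    propagation stops once the weight falls below `min_weight`.
    """
    indirect: Dict[Descriptor, float] = {}
    queue = deque([(start, weight)])
    while queue:
        desc, w = queue.popleft()
        for dim, graph in MAPS.items():
            for parent in graph.get(desc[dim], []):
                new = desc[:dim] + (parent,) + desc[dim + 1:]   # replace one slot
                nw = w * decay
                if nw >= min_weight and nw > indirect.get(new, 0.0):
                    indirect[new] = nw
                    queue.append((new, nw))
    return indirect
```

Starting from direct evidence for 〈derivative,_,think,_,_,_〉, this sketch produces indirect evidence for 〈differentiation,_,think,_,_,_〉 and 〈derivative,_,judge,_,_,_〉, and more weakly for 〈differentiation,_,judge,_,_,_〉.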

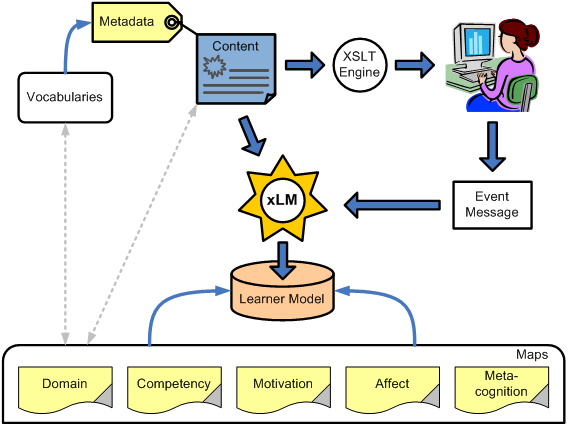

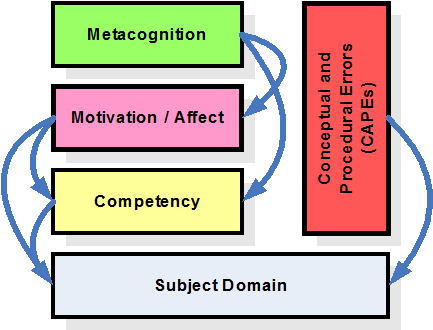

Figure 3

The layered structure of learner models determines the possible combinations of dimensions (the application of upper layers to lower layers) in learner model beliefs.

Learner Modelling Example

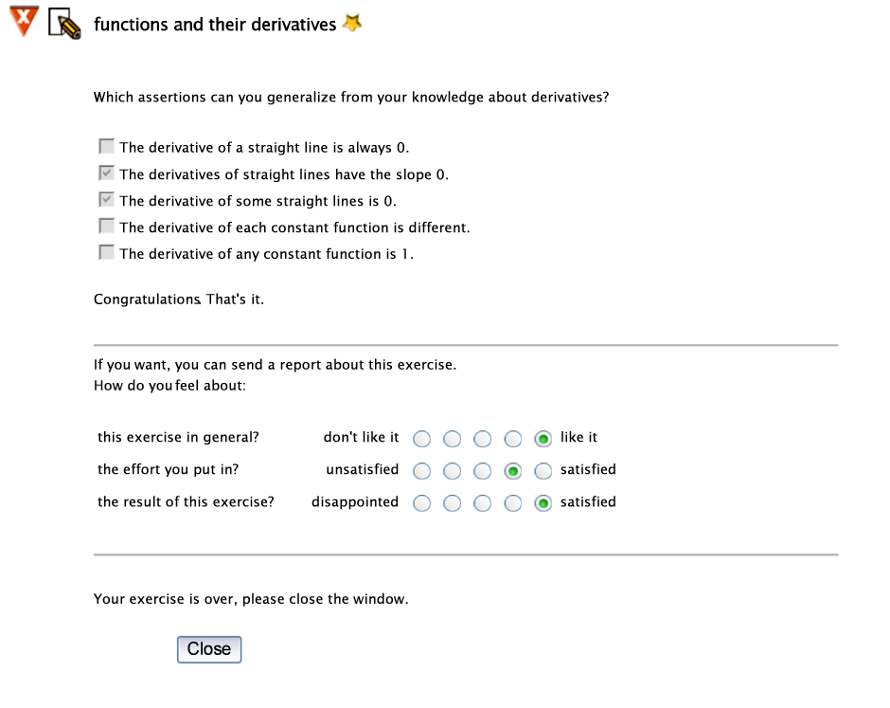

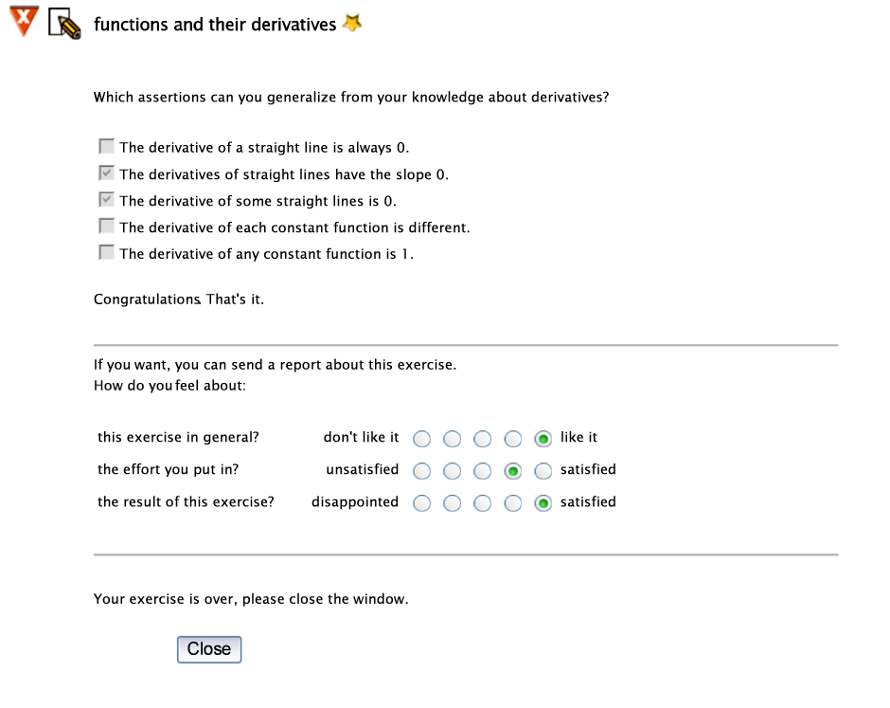

To illustrate what has been explained above, let us consider the case of a learner who is studying Differential Calculus and has finished the exercise on differentiation of linear functions shown in Figure 4. xLM receives an event message reporting that the learner has just successfully finished the exercise identified as mbase://LeAM_calculus/exercisesDerivs/mcq_const_lin_derivs.

Figure 4

Example of the exercise and self-report tool in LeActiveMath.

Then xLM requests the exercise metadata and receives the following information, among others:

- Exercise is for content items ex_diff_const and ex_diff_lin, which are examples of differentiating a constant function and differentiating a linear function, respectively.

- Difficulty: very easy.

- Competency: think mathematically.

- Competency level: simple conceptual.

Subsequently, xLM goes from the exercise to the pair of differentiation examples, to the definitions of the corresponding differentiation rules, and so on up to the nodes diff_quotient, deriv_pt and derivative in the map of the subject domain, which stand for the domain topics of difference quotient, derivative at a point and derivative, respectively. With this information, xLM can now construct the descriptors of the beliefs for which the exercise provides new evidence:

〈diff_quotient,_,think,_,_,_〉, 〈deriv_pt,_,think,_,_,_〉 and 〈derivative,_,think,_,_,_〉.
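The upward walk from the exercise to the domain topics can be sketched as a graph search that stops at topic nodes. The link structure below, including the rule-definition nodes `def_diff_const` and `def_diff_lin` and their edges, is hypothetical, chosen only so that the walk reaches the three topics named in the text.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical links from content items towards the domain map.
LINKS: Dict[str, List[str]] = {
    "mcq_const_lin_derivs": ["ex_diff_const", "ex_diff_lin"],  # exercise -> examples
    "ex_diff_const": ["def_diff_const"],                       # example -> rule definition
    "ex_diff_lin": ["def_diff_lin"],
    "def_diff_const": ["diff_quotient", "deriv_pt"],            # illustrative edges
    "def_diff_lin": ["deriv_pt", "derivative"],
}
TOPICS: Set[str] = {"diff_quotient", "deriv_pt", "derivative"}  # nodes that are domain topics


def topics_for(item: str) -> List[str]:
    """Collect the domain topics reachable from a content item (depth-first search)."""
    seen: Set[str] = set()
    found: Set[str] = set()
    stack = [item]
    while stack:
        node = stack.pop()
        if node in seen:
            continue
        seen.add(node)
        if node in TOPICS:
            found.add(node)
            continue            # stop climbing: broader topics are left to propagators
        stack.extend(LINKS.get(node, []))
    return sorted(found)


def descriptors(item: str, competency: str) -> List[Tuple[str, ...]]:
    # "_" marks an empty slot, as in the belief descriptors written in the text.
    return [(t, "_", competency, "_", "_", "_") for t in topics_for(item)]
```

Stopping at topic nodes keeps the direct evidence focused; reaching broader nodes such as differentiation is left to the propagation step described earlier.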

The beliefs corresponding to these belief descriptors all concern the learner's competency level at thinking mathematically on/with the topics trained or tested by the exercise. Information on the difficulty and competency level of the exercise and the success rate achieved by the learner is used to calculate probabilities for the learner being at each of four possible competency levels. These probabilities are then transformed into a belief function (Shafer, 1976), a numeric formalism for representing beliefs that generalises probabilities and allows for a better representation of ignorance (lack of evidence) and conflict (conflicting evidence). Belief functions are the formalism used by xLM to represent its beliefs and their supporting evidence (Morales, Van Labeke, & Brna, 2006).
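The text does not say exactly how xLM turns the four-level probability distribution into a belief function. One standard way to express ignorance, shown here only as an illustration, is Shafer's discounting: a fraction `alpha` of the probability mass is moved to the whole frame of levels. The value of `alpha` and the example probabilities are assumptions.

```python
from fractions import Fraction
from typing import Dict, FrozenSet

Level = int
Mass = Dict[FrozenSet[Level], Fraction]
FRAME: FrozenSet[Level] = frozenset({1, 2, 3, 4})   # the four competency levels


def discounted_mass(p: Dict[Level, Fraction], alpha: Fraction) -> Mass:
    """Turn a probability over levels into a mass function by Shafer discounting.

    Each singleton keeps (1 - alpha) of its probability; the remaining alpha
    goes to the whole frame, i.e. "any level", which encodes ignorance.
    """
    if sum(p.values()) != 1 or not 0 <= alpha <= 1:
        raise ValueError("p must sum to 1 and alpha must lie in [0, 1]")
    m: Mass = {frozenset({lvl}): (1 - alpha) * pr for lvl, pr in p.items() if pr > 0}
    m[FRAME] = alpha
    return m


def bel(m: Mass, a: FrozenSet[Level]) -> Fraction:
    # Belief: total mass of focal sets contained in a.
    return sum((v for s, v in m.items() if s <= a), Fraction(0))


def pl(m: Mass, a: FrozenSet[Level]) -> Fraction:
    # Plausibility: total mass of focal sets that intersect a.
    return sum((v for s, v in m.items() if s & a), Fraction(0))
```

With probabilities 1/10, 2/10, 5/10 and 2/10 for Levels I to IV and `alpha = 1/5`, the belief in Level III is 2/5 while its plausibility is 3/5; the gap between the two is the ignorance that a plain probability cannot express.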

The initial set of direct evidence (three pieces, one for each belief) is sent as input to propagators, which produce new pieces of indirect evidence for beliefs with descriptors such as 〈differentiation,_,think,_,_,_〉, propagating on the domain map, and 〈derivative,_,judge,_,_,_〉, propagating on the competency map.

The learner's self-report of their affective state (bottom of Figure 4) would be delivered to xLM in another event message and then used to infer new evidence for beliefs on the affective dispositions of the learner towards domain topics and mathematical competencies, with descriptors such as 〈diff_quotient,_,_,liking,_,_〉 and 〈differentiation,_,think,affect,_,_〉.

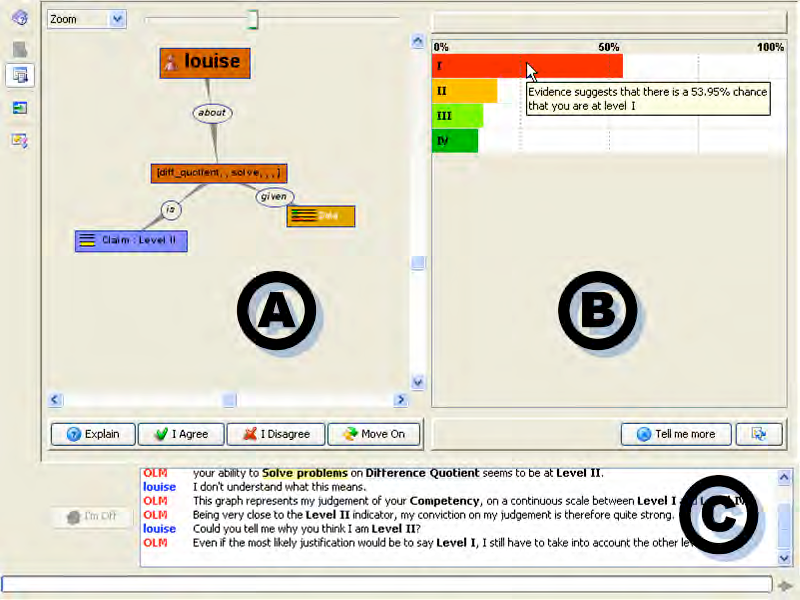

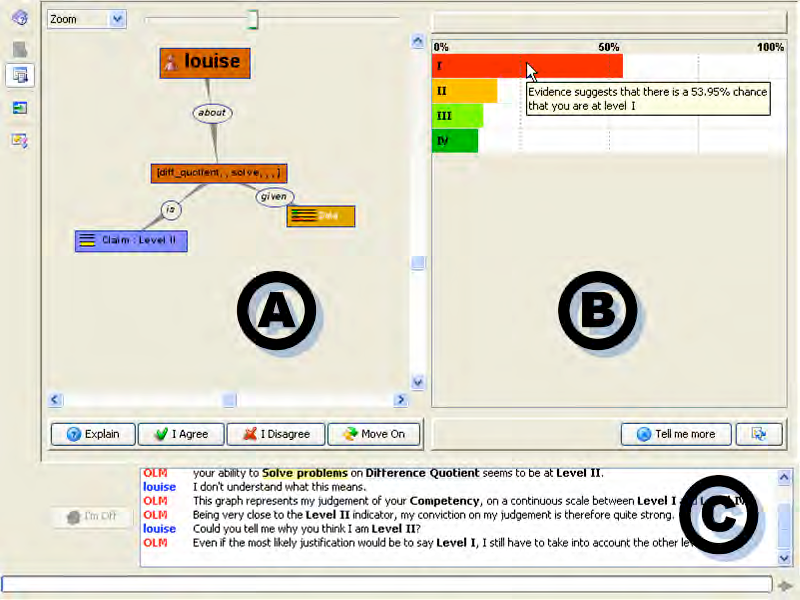

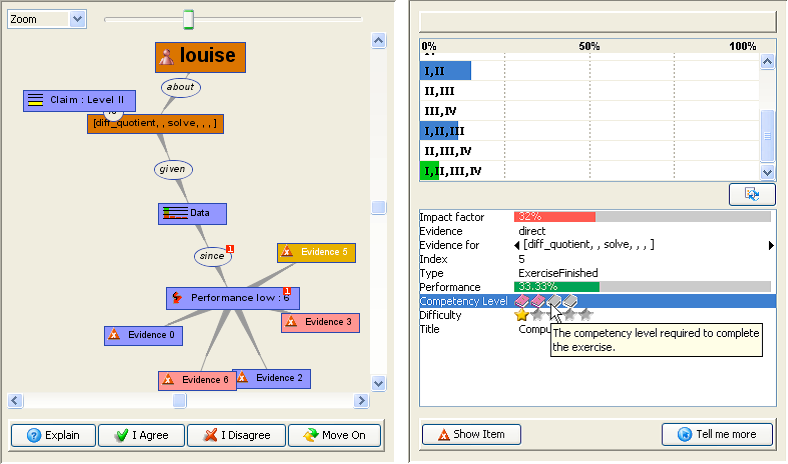

Learner Model Inspection and Challenge

xLM provides LeActiveMath users with facilities for inspecting their learner models and for challenging beliefs held in them. This has been accomplished via a dedicated graphical user interface (Figure 5) that allows learners to navigate through the web of beliefs and evidence built by xLM over the user's interaction with LeActiveMath, as described in the previous section.

Figure 5

xOLM graphical user interface to learner models.

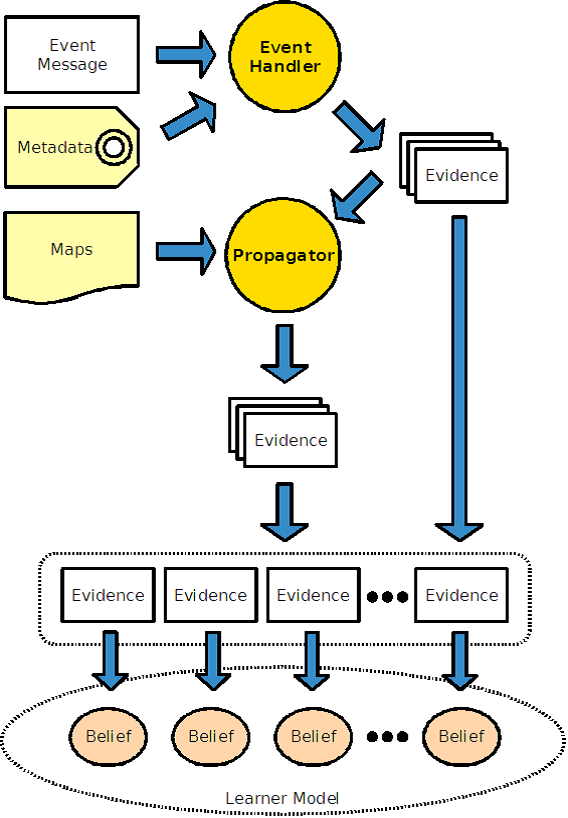

Toulmin Argumentation Pattern

The complexity of such a modelling process requires a mechanism to control the delivery of all this information in a way that preserves its significance. In xOLM, this mechanism is inspired by the Toulmin Argumentation Pattern (Toulmin, 1959) which, despite its (superficial) simplicity, does provide us with the possibility of managing both the exploration of the learner model and the challenge of its judgements. It also supports quite nicely a dynamic reorganisation of the evidence that helps to establish and clarify the justifications presented to the learner.

In xOLM, the mapping between each element of Toulmin's pattern (see Figure 6) and the elements of xLM’s internal representations is as follows:

- The Claim is associated with a summary belief, that is, a short, straightforward judgement about the learner’s ability, or other disposition, on a given topic (e.g. “I think you are Level II on mathematical thinking on derivatives”).

- The Data (or Grounds) is associated with a full belief, represented both by its pignistic probability function (its simplest internal encoding) and by its mass function (its full internal encoding) (Morales et al., 2006).

- Warrants are associated with the evidence supporting the belief, represented by mass functions. There will be one warrant for every piece of evidence used by xLM to build its current belief.

- Backings are associated with the attributes, both qualitative and quantitative, of the events whose interpretation has produced the evidence supporting the belief. Backings and warrants come in pairs for a single belief, although the same backing may be associated with distinct beliefs.
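The mapping can be pictured as a small data structure. The sketch below is a hypothetical rendering, not xLM's internal classes: a Claim summarising the most likely level, Data holding both encodings of the belief, and one Warrant per piece of evidence, each paired with a Backing. Because backings are plain objects, the same backing can be shared by warrants of distinct beliefs, as the text allows.

```python
from dataclasses import dataclass, field
from typing import Dict, FrozenSet, List

Mass = Dict[FrozenSet[str], float]  # mass function over sets of levels


@dataclass
class Backing:
    """Attributes of the interpreted event (e.g. exercise id, success rate)."""
    event_attributes: Dict[str, object]


@dataclass
class Warrant:
    """One piece of evidence, as a mass function, paired with its backing."""
    evidence: Mass
    backing: Backing


@dataclass
class Data:
    """The full belief: pignistic (simplest) and mass (full) encodings."""
    pignistic: Dict[str, float]
    mass: Mass


@dataclass
class Argument:
    claim: str
    data: Data
    warrants: List[Warrant] = field(default_factory=list)


def summarise(pignistic: Dict[str, float], competency: str, topic: str) -> str:
    """Claim: the most likely level, phrased as a short judgement."""
    level = max(pignistic, key=pignistic.get)
    return f"I think you are Level {level} on {competency} on {topic}"


def build_argument(pignistic, mass, evidence, competency, topic) -> Argument:
    """`evidence` is a list of (mass function, Backing) pairs."""
    warrants = [Warrant(m, backing) for m, backing in evidence]
    return Argument(summarise(pignistic, competency, topic), Data(pignistic, mass), warrants)
```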

It should be noted that xOLM does not treat any evidence gathered by xLM as a Rebuttal, since every piece of evidence in a learner model supports its corresponding belief. A Rebuttal exists in xOLM only momentarily, as an explicit challenge from the learner to a belief held in the learner model, expressed through the graphical interface. Once it has been incorporated into the adjusted belief, however, even the learner’s challenge is evidence for the new belief and hence becomes a warrant.
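The text does not say how the challenge is folded into the adjusted belief. Since beliefs and evidence are belief functions, one natural candidate is Dempster's rule of combination, sketched below under that assumption; the 0.5 weight given to the learner's challenge is hypothetical. The mass that falls on the empty set measures the conflict between the model and the learner, the kind of conflicting evidence belief functions are meant to represent.

```python
LEVELS = frozenset({"I", "II", "III", "IV"})


def combine_dempster(m1, m2):
    """Dempster's rule: multiply masses of intersecting focal sets, collect the
    mass falling on the empty set as conflict, then renormalise."""
    combined = {}
    conflict = 0.0
    for a, va in m1.items():
        for b, vb in m2.items():
            common = a & b
            if common:
                combined[common] = combined.get(common, 0.0) + va * vb
            else:
                conflict += va * vb
    if 1.0 - conflict <= 1e-12:
        raise ValueError("totally conflicting evidence cannot be combined")
    normalised = {focal: v / (1.0 - conflict) for focal, v in combined.items()}
    return normalised, conflict


# Current belief held by the model: fairly committed to Level II.
model_belief = {frozenset({"II"}): 0.6, LEVELS: 0.4}
# Learner's challenge ("I am Level III"), weighted by a hypothetical 0.5.
challenge = {frozenset({"III"}): 0.5, LEVELS: 0.5}

new_belief, conflict = combine_dempster(model_belief, challenge)
```

Here the conflict is 0.3; after renormalisation the mass on Level II drops from 0.6 to about 0.43, while Level III gains about 0.29, so the challenge now acts as one more warrant for the adjusted belief.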

Inspection and Challenge in the User Interface

The presentation of a belief – and its ultimate justification step-by-step – is controlled by the dual view, as seen in Figure 5: the Argument view (labelled A) and the Component view (labelled B). A verbalisation of the interaction between the learners and xOLM (labelled C) also acts as a complementary source of information and support.

The purpose of the Argument view is twofold: to provide the user with a representation of the logic behind the justification of the judgement made by xOLM, and to provide an interface for navigating between the various external representations associated with each component of the justification. It is a direct reification of Toulmin's pattern, rendered as a dynamic, interactive graph. Each node of the graph is associated with one of the components of the argumentation pattern: the claim node with the summary belief, the data node with the belief itself, the warrant and backing nodes with individual pieces of evidence, and so on. The shapes, colours, labels and icons of the nodes are designed to allow quick and unambiguous identification of the corresponding element.

The Toulmin nodes react to learners’ interaction, acting as triggers for the next step of the exploration. When a node is selected, two actions take place: first, the appropriate external representation is immediately displayed in the Component view on the right-hand side; second, Toulmin’s pattern on the left-hand side is expanded to give learners access to the next layer of the argumentation. Note that some intermediary nodes, which do not react to learners’ interaction, have been added to introduce meaningful associations between the important parts of the graph (e.g. “about”, “given”, etc.); they are mostly linguistic add-ons that improve both the readability and the layout of the graph.

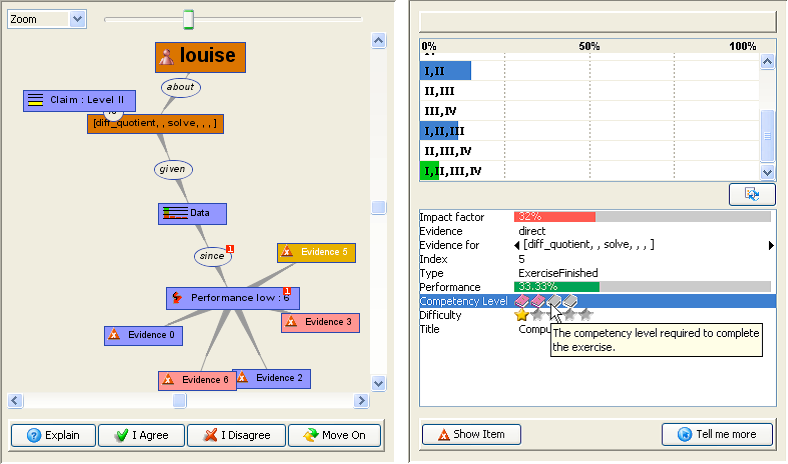

A mock interaction between a learner and xOLM is shown in Figure 7. It represents steps in the inspection and justification of a belief. Each step of the discussion results from the learner requesting an explanation of why xOLM made its judgement. At the interface level, the expansion of Toulmin’s pattern, combined with an external representation of the current component of the argumentation (see Figure 8), allows learners to inspect, and ultimately challenge, several aspects of xLM. A more detailed description of the interface can be found in Van Labeke et al. (2007).

Figure 8

An expanded Toulmin’s Argumentation Pattern, side-by-side with a dedicated external representation of the Warrant/Backing component.

Design and Implementation Issues

Many things need to work together for the process described in the previous section to run smoothly. There are many decision points where trade-offs have been made between efficiency, generality, flexibility and availability of resources within the project.

Knowledge vs. Content

From the beginning of the project there were divergent views regarding the nature of the material developed for the project in OMDoc. From one viewpoint, it can be seen as close to mathematical knowledge, given OMDoc’s focus on mathematical meaning rather than on visual presentation. From another viewpoint, the semantic nature of OMDoc is mediated by the nature of the documents it encodes and by the processing capabilities of its interpreters. Formal mathematical documents encoded in OMDoc should be written with consistency and completeness in mind, since their purpose is to represent knowledge that can be verified, proved and otherwise interpreted and used by computers. Educational mathematical documents, on the other hand, are written pedagogically, their purpose being to provoke learning experiences. Educational documents can be rather inconsistent, repetitive and incomplete, even on purpose, if that is believed to improve their pedagogical effect.

The issue became acute when it came to deciding the shape of the subject domain map in xLM. One possibility was to build the map from content items, so that content items (e.g. OMDoc concepts and symbols) were the subjects of beliefs. On the one hand, this approach is quick and simple, and it is the one used by ActiveMath’s old learner model: authoring new content would automatically update the map, and every author could define topics for xLM to model learners on. On the other hand, it is prone to inconsistencies, repetitions and incompleteness in the learner models, very much as the content itself can be. Another possibility was to develop an explicit ontological/conceptual map of the subject domain, as a more stable framework for xLM to ground beliefs on (Kay & Lum, 2004). Since the LeActiveMath system lacks an embedded domain expert able to interpret content and answer questions about it, a map of the domain would make explicit part of the hidden, implicit semantics of the content. A map of the domain could also help authors to describe their content better, by making explicit references to the relevant parts of the map. However, since any subject domain can be described from many viewpoints, there can be many different, even conflicting, maps of just about anything. A third option was to use a collection of content dictionaries written in OpenMath (Buswell, Caprotti, Carlisle, Dewar, & Kohlhase, 2004) – the formal, XML-based mathematical language on which OMDoc is based. This option was discarded, however, because the content dictionaries were found inadequate, both in terms of the topics covered and of the relationships between them.
Consequently, a separate concept map of the subject domain was chosen for xLM: a hand-crafted domain map, implemented as part of xLM, which covers a subset of Differential Calculus – the main subject domain of LeActiveMath – and includes a mapping from content items to the relevant concepts, where available. It provides a solid ground for learner modelling that is less sensitive to changes in content.
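A minimal sketch of this arrangement, assuming a hypothetical map fragment and hypothetical content identifiers: concepts live in a hand-crafted map, and content items merely point into it. A content item with no mapping simply yields no concepts, so adding or removing content leaves the map, and the beliefs grounded on it, untouched.

```python
from typing import Dict, List

# Hypothetical fragment of a hand-crafted domain map: concept -> broader concepts.
DOMAIN_MAP: Dict[str, List[str]] = {
    "differentiation": [],
    "derivative": ["differentiation"],
    "deriv_pt": ["derivative"],
    "diff_quotient": ["derivative"],
}

# Hypothetical mapping from content item identifiers to domain concepts.
CONTENT_TO_CONCEPTS: Dict[str, List[str]] = {
    "content:def_deriv_pt": ["deriv_pt"],
    "content:ex_diff_quotient_1": ["diff_quotient"],
}


def concepts_for(content_id: str) -> List[str]:
    """Concepts a content item refers to; empty if no mapping is available."""
    return [c for c in CONTENT_TO_CONCEPTS.get(content_id, []) if c in DOMAIN_MAP]


def broader_concepts(concept: str) -> List[str]:
    """All concepts reachable upwards from `concept` in the domain map."""
    seen: List[str] = []
    stack = list(DOMAIN_MAP.get(concept, []))
    while stack:
        current = stack.pop()
        if current not in seen:
            seen.append(current)
            stack.extend(DOMAIN_MAP.get(current, []))
    return seen
```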

Maps and Vocabularies

There is only a weak relationship between the map of competencies used by xLM and the vocabularies used for specifying the relevant competencies in content metadata. They are certainly based on the same framework (OECD, 2003), and care has been taken to make them coincide, but this coincidence does not derive from any explicit link between them.

The mapping from content to topics is currently either hardwired into the implementation of the hand-crafted domain map or generated dynamically at startup. In either case, it is hidden from content authors. Similarly, knowledge about the vocabularies for metadata such as difficulty and competency level is hardwired into the code of xLM, particularly in event handlers and diagnosers such as the Situational Model and the Open Learner Model. There is no explicit link between this knowledge and the definition of the vocabularies.

Metadata and its usage

A core but limited subset of the available content metadata is actually taken into account while interpreting events. It is quite possible that making use of more metadata may provide knowledge of additional important features of content; features that can be the reasons behind apparently contradictory evidence. Nonetheless, since most metadata for the current LeActiveMath content has been produced based on the subjective appreciation of their authors, rather than on empirical evaluation of content, it may in reality provide very little extra information (coming from the same, biased source) and could be misleading.

Propagation Algorithm

A learner model in xLM is a large belief network constructed by composing the maps that define the distinct dimensions of the learner to be modelled (Figure 3). Every belief and every piece of evidence in this network is represented as a belief function. Propagators, which use the internal structure of the maps to propagate evidence, require the definition of a conditional belief function for each association between elements in the maps. In the current implementation of xLM, however, a single conditional belief function is used for all associations in all maps, despite their many different types.
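The effect of using one conditional for every association can be illustrated with Shafer's discounting, used here as a stand-in for a conditional belief function (an assumption; the chapter does not give xLM's conditional). Each hop scales the committed mass by the same hypothetical strength and turns the remainder into ignorance, so evidence weakens with distance regardless of the type of association crossed.

```python
LEVELS = frozenset({"I", "II", "III", "IV"})

# Hypothetical strength shared by every association in every map, mirroring
# the single conditional belief function of the current implementation.
SINGLE_STRENGTH = 0.7


def discount(mass, strength):
    """Shafer discounting: scale every focal mass by `strength` and give the
    removed mass to the whole frame (i.e. turn it into ignorance)."""
    out = {focal: strength * v for focal, v in mass.items() if focal != LEVELS}
    out[LEVELS] = strength * mass.get(LEVELS, 0.0) + (1.0 - strength)
    return out


def propagate(mass, hops):
    """Indirect evidence for a node `hops` associations away."""
    for _ in range(hops):
        mass = discount(mass, SINGLE_STRENGTH)
    return mass


direct = {frozenset({"II"}): 0.8, LEVELS: 0.2}
```

With a strength of 0.7, a direct mass of 0.8 on Level II becomes 0.56 after one hop and 0.392 after two, while the mass on the whole frame grows accordingly; a part-of link and a loosely related link would weaken it identically.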

A careful analysis of the maps and the propagation algorithm is necessary to determine suitable adjustments. Along the same lines, there are a few parameters that can be fine-tuned to optimise xLM’s performance in terms of accuracy, reliability and efficiency. Of particular interest is performance with larger maps.

Dynamic vs. static learner models

Most of xOLM’s external representations provide learners with an overview of the current state of the learner model (belief by belief) but not of its evolution over time. Although some attempts to give access to the dynamics of learner models have been made, it became evident that they raised more issues than they solved. Among them were the question of consistency across external representations (e.g. how to represent the dynamics of complex information such as that encoded in the pignistic function, the mass distribution, etc.); the question of controlling the dynamic representation (e.g. replaying, going backward, stopping, etc.); and the question of integrating representations of the dynamic and static aspects of learner models (e.g. selecting a step of a process to access the related evidence).

As with most learner models, an assumption underlying the implementation of xOLM is that the interest of the learners will be on the actual state of the beliefs rather than on their trajectories. This assumption needs to be carefully challenged in the future, by introducing dynamic aspects of xLM wherever they are likely to provide different information and support different (and improved) reflection by learners.

Support for inspection of learner models

Inspecting a learner model is a complex task, so finding an adequate paradigm for this activity and producing a supportive interface is an important issue to address. Such an interface should allow learners to easily search for and locate beliefs in learner models. In fact, it should proactively suggest some beliefs to start with, based on what is stored in the learner model. It should present them in context, connected to the rest of the beliefs in the model, at least for consistency with the use of evidence propagation in learner models, but most importantly to support learner metacognition (Flavell, 1979).

The current implementation of xLM includes a simple mechanism for identifying beliefs by their descriptors, plus minimal facilities for seeing beliefs in context (using a representation of the belief network as a sort of hyperbolic graph), but these should be seen more as proofs of concept than as user-friendly facilities of the xOLM graphical user interface.
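Identifying beliefs by their descriptors can be as simple as wildcard matching. The sketch assumes, as the examples in this chapter suggest, that descriptors are six-slot tuples in which `_` leaves a slot unspecified; in a query, `_` matches any value. The function names and the model contents are hypothetical.

```python
from typing import Dict, List, Tuple

Descriptor = Tuple[str, ...]
WILDCARD = "_"


def matches(pattern: Descriptor, descriptor: Descriptor) -> bool:
    """True if every non-wildcard slot of the pattern equals the descriptor's."""
    if len(pattern) != len(descriptor):
        return False
    return all(p == WILDCARD or p == d for p, d in zip(pattern, descriptor))


def find_beliefs(model: Dict[Descriptor, object], pattern: Descriptor) -> List[Descriptor]:
    """Descriptors of all beliefs in `model` that match `pattern`, sorted."""
    return sorted(d for d in model if matches(pattern, d))


# A toy learner model: descriptor -> belief (the beliefs themselves are elided).
model = {
    ("derivative", "_", "think", "_", "_", "_"): None,
    ("differentiation", "_", "think", "_", "_", "_"): None,
    ("diff_quotient", "_", "_", "liking", "_", "_"): None,
}
thinking_beliefs = find_beliefs(model, ("_", "_", "think", "_", "_", "_"))
```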

To show or not to show, because it is complex

xOLM was designed with the goal of giving learners full access to what is held in their learner models, from simple summaries of beliefs to their full representation in a knowledge representation formalism, and from the interpretation of the events supporting a belief to the details of those events. A twofold motivation sustained this goal throughout the design and implementation process: that learners have the right to know, and that full access to the information in their learner models would help learners to understand them better, answering their own questions by going deeper into their models. We believed that hiding information from learners and presenting only partial information in a simplified way could hinder learners' understanding of their models, blurring the rationale behind the (summarised) beliefs. It would also make it harder for learners to challenge a learner model they did not understand.

There seems to be a trade-off between inspectability and readability, which has had an impact on the xOLM interface. It also seems that learners' difficulties in understanding their models are severe at both extremes (i.e. a heavy bias towards either inspectability or readability). The best solution seems to lie somewhere in the middle, yet such a solution has yet to be found.

Adaptive open learner modelling

The same rationale that makes us believe that personalisation through proactive adaptation is a necessary ingredient of any system that aims to provide the best learning environment for each individual learner applies to the case of open learner modelling. That is to say, a learner interacting with their learner model will need personalised settings in order to take full advantage of the experience. There are, of course, many aspects of the interaction that can be adapted to the learner, among them the content of the model that is accessible to the learner at a given time, the amount of it that is presented at once, the media, modality and general organisation of its presentation, the navigation support and the stubbornness with which the system defends its beliefs. We are not aware of any research carried out in this direction.

GENERIC OPEN LEARNER MODELLING

We have envisioned a future for xLM in which it can be easily embedded into other educational systems or even deployed as a learner modelling server. There have been a few attempts to do this in the history of research in intelligent tutoring systems (Brooks et al., 2004; Kobsa & Pohl, 1995; Paiva & Self, 1995; Zapata-Rivera & Greer, 2004), with some level of success within the research community but, as yet, no widespread use outside it. Besides the obvious moves of making xLM appealing through its core functionality as an open learner modelling engine and improving its use of Semantic Web technologies and standards, a proper parameterisation of its components would help xLM to better serve other educational systems. We can examine these issues from the perspective of the open learner modelling process described in the previous section.

To start with, three aspects need to be flexible: the number of dimensions used by xLM; the way they are combined to set the framework for learner models (Figure 3); and the maps that give each dimension its structure and that, combined as dictated by the framework, produce the network of beliefs held in learner models. The maps should be encoded using a standardised language, such as XTM – XML for Topic Maps (TopicMaps.org, 2001) – and supplied to xLM as parameters. An explicit and strong connection between the maps and the vocabularies for metadata would be beneficial too.
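Supplying maps as parameters presupposes reading them from a standard format. The sketch below reads a simplified subset of XTM 1.0 (topics with a base name, and typed associations between topic references) using Python's standard `xml.etree.ElementTree`. The sample map and the choice of constructs are assumptions; scopes, occurrences and role specifications are ignored.

```python
import xml.etree.ElementTree as ET

XTM = "{http://www.topicmaps.org/xtm/1.0/}"
XLINK_HREF = "{http://www.w3.org/1999/xlink}href"

# A tiny, hypothetical domain-map fragment in XTM 1.0 syntax.
SAMPLE = """
<topicMap xmlns="http://www.topicmaps.org/xtm/1.0/"
          xmlns:xlink="http://www.w3.org/1999/xlink">
  <topic id="derivative">
    <baseName><baseNameString>derivative</baseNameString></baseName>
  </topic>
  <topic id="deriv_pt">
    <baseName><baseNameString>derivative at a point</baseNameString></baseName>
  </topic>
  <topic id="is_part_of">
    <baseName><baseNameString>is part of</baseNameString></baseName>
  </topic>
  <association>
    <instanceOf><topicRef xlink:href="#is_part_of"/></instanceOf>
    <member><topicRef xlink:href="#deriv_pt"/></member>
    <member><topicRef xlink:href="#derivative"/></member>
  </association>
</topicMap>
"""


def load_map(xtm_text):
    """Return ({topic id: name}, [(association type, [member ids])])."""
    root = ET.fromstring(xtm_text.strip())
    names = {}
    for topic in root.iter(XTM + "topic"):
        names[topic.get("id")] = topic.findtext(f"{XTM}baseName/{XTM}baseNameString")
    associations = []
    for assoc in root.iter(XTM + "association"):
        type_ref = assoc.find(f"{XTM}instanceOf/{XTM}topicRef")
        assoc_type = type_ref.get(XLINK_HREF).lstrip("#") if type_ref is not None else None
        # Only direct topicRef children of <member>; roleSpec references are skipped.
        members = [ref.get(XLINK_HREF).lstrip("#")
                   for ref in assoc.findall(f"{XTM}member/{XTM}topicRef")]
        associations.append((assoc_type, members))
    return names, associations


names, associations = load_map(SAMPLE)
```

The same file could then feed both the propagators (through the associations) and the interface (through the topic names), which is one way of keeping maps and their descriptions in step.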

Knowledge of the content, structure and semantics of the event messages recognisable by xLM (Figure 1) needs to be made explicit and accessible to xLM users (researchers and developers). This amounts to specifying a data model, as in SCORM (ADL, 2004c), plus its intelligent processing. For example, the current implementation of xLM supports event messages reporting log-ins and log-outs, the starting and finishing of exercises (including a measure of success rate), self-reports of affective states, and diagnoses of motivational states and meta-cognitive skills, but all knowledge of which event messages are supported and how to interpret them is hardwired in the code of xLM’s event handlers.
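One way to make the supported event messages explicit, rather than buried in handler code, is a registry that both documents and dispatches them. This is a hypothetical design: the event type names, field names and evidence format below are illustrative assumptions, not xLM's actual message format.

```python
from typing import Callable, Dict, List, Tuple

Evidence = Tuple[Tuple[str, ...], dict]  # (belief descriptor, evidence attributes)
Handler = Callable[[dict], List[Evidence]]

# Explicit registry: the supported event types can be listed and documented.
HANDLERS: Dict[str, Handler] = {}


def handles(event_type: str) -> Callable[[Handler], Handler]:
    """Decorator registering a handler for one (hypothetical) event type."""
    def register(fn: Handler) -> Handler:
        HANDLERS[event_type] = fn
        return fn
    return register


@handles("ExerciseFinished")
def on_exercise_finished(event: dict) -> List[Evidence]:
    descriptor = (event["topic"], "_", event["competency"], "_", "_", "_")
    return [(descriptor, {"success_rate": event["success_rate"]})]


@handles("AffectSelfReport")
def on_affect_self_report(event: dict) -> List[Evidence]:
    descriptor = (event["topic"], "_", "_", event["affect"], "_", "_")
    return [(descriptor, {"value": event["value"]})]


def supported_events() -> List[str]:
    """The data model made queryable: which event types are understood."""
    return sorted(HANDLERS)


def interpret(event: dict) -> List[Evidence]:
    """Dispatch an event message; unknown types are reported, not ignored."""
    handler = HANDLERS.get(event["type"])
    if handler is None:
        raise KeyError(f"unsupported event type: {event['type']}")
    return handler(event)
```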

Propagation of evidence in learner models would greatly benefit from specialised conditionals attached to the associations in the concept maps. Consequently, finding an easy way to define them is an important problem. We are exploring a possible solution that defines a conditional per association type (Dichev & Dicheva, 2005) and adjusts it case by case, for each individual association on the maps, by taking into account the number of nodes the association connects: the more nodes connected, the weaker the conditional.
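A minimal sketch of the adjustment being explored, under stated assumptions: the association type names and base strengths are hypothetical, and dividing the base strength among the other connected nodes is only one possible way of making conditionals weaker as associations grow. The input is a list of (type, member ids) pairs.

```python
from typing import Dict, List, Tuple

# Hypothetical base strengths per association type (not values from xLM).
BASE_STRENGTH: Dict[str, float] = {
    "is_part_of": 0.8,
    "prerequisite_of": 0.6,
    "related_to": 0.3,
}


def conditional_strength(assoc_type: str, n_nodes: int) -> float:
    """Strength in [0, 1] of the conditional for one association: the type's
    base strength divided among the other nodes the association connects."""
    if n_nodes < 2:
        raise ValueError("an association connects at least two nodes")
    return BASE_STRENGTH[assoc_type] / (n_nodes - 1)


def strengths_for(associations: List[Tuple[str, List[str]]]) -> Dict[Tuple[str, Tuple[str, ...]], float]:
    """Map each (type, members) association to its adjusted conditional strength."""
    return {(t, tuple(members)): conditional_strength(t, len(members))
            for t, members in associations}
```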

For xOLM, the visible face of xLM, every event, map, metadata element and vocabulary has to be provided with (internationalised) descriptions of its constitutive elements, to be used in the graphical user interface to learner models. These descriptions are needed at various levels, as can be seen in Figure 5. Parameterising the evidence presentation view, particularly for the events whose interpretation delivers the evidence, means that important attributes have to be identified, their names and values described, and the (graphical) rendering used to display them properly specified. Parameterising the dialogue view (zone C in Figure 5, a verbalisation of the exchange between the learner and xOLM) means that a verbal description of xOLM events has to be defined, including the templates to use and their arguments. The description of each argument needs to indicate how it should be formatted in the template. All references to belief elements need to be defined for their externalisation: descriptor, ability levels, and so on. For example, the belief descriptor 〈deriv_pt,_,think,_,_,_〉 needs to be transcribed according to the descriptions in the relevant topic maps (deriv_pt referring to the topic ‘derivative at a point’ in the domain map and think referring to the competency of ‘mathematical thinking’ in the competency map), and the abstract ability levels currently used need to be mapped to the relevant vocabularies (e.g. for a competency level, Level II could be transcribed as ‘medium’).
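The transcription described above can be sketched as a lookup plus a template. Only the names ‘derivative at a point’ and ‘mathematical thinking’ and the mapping of Level II to ‘medium’ come from the text; the remaining vocabulary entries, the template wording and the function name are hypothetical, and a real system would load them from internationalised resources.

```python
from typing import Tuple

# Descriptions taken from the example in the text.
DOMAIN_NAMES = {"deriv_pt": "derivative at a point"}
COMPETENCY_NAMES = {"think": "mathematical thinking"}
# Only "II" -> "medium" comes from the text; the other entries are hypothetical.
LEVEL_VOCABULARY = {"I": "low", "II": "medium", "III": "high", "IV": "very high"}
# Hypothetical dialogue template; each argument is formatted by verbalise().
CLAIM_TEMPLATE = "I think your level of {competency} on {topic} is {level}."


def verbalise(descriptor: Tuple[str, ...], level: str) -> str:
    """Turn a belief descriptor and an abstract level into a learner-facing
    sentence, falling back to the raw identifier when no description exists."""
    topic, competency = descriptor[0], descriptor[2]
    return CLAIM_TEMPLATE.format(
        topic=DOMAIN_NAMES.get(topic, topic),
        competency=COMPETENCY_NAMES.get(competency, competency),
        level=LEVEL_VOCABULARY[level],
    )


print(verbalise(("deriv_pt", "_", "think", "_", "_", "_"), "II"))
```

For 〈deriv_pt,_,think,_,_,_〉 at Level II this prints "I think your level of mathematical thinking on derivative at a point is medium." The fallback to raw identifiers makes missing descriptions visible rather than breaking the dialogue view.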

We have presented xLM, the open learner modelling subsystem of a Web-based educational system for mathematics called LeActiveMath. We have described xLM’s functionality, particularly in relation to its use of technologies related to the Semantic Web, and discussed important design and implementation issues. Because we aim to decouple xLM from the LeActiveMath system so that it can serve a variety of educational systems, we have discussed a minimum set of requirements for accomplishing this goal, emphasising the need to parameterise xLM and to improve its use of Semantic Web standards and technologies. Striving for generality has been, together with open learner modelling, a ‘salutary principle’ for xLM (Self, 1988), yet the road ahead is full of challenges.

EVALUATION OF OPEN LEARNER MODELLING

Inspecting an open learner model cannot guarantee improved learning. In the worst case, the learner might be trapped in a cycle of introspection from which they find it hard to escape. In the best case, the learner not only learns the material that they are supposed to learn but also becomes a “better learner”. Evaluation studies can help us map out the ways in which learners with different profiles, characters and background knowledge can benefit from different aspects of open learner models. Following Bull and Kay (2007), we might expect to find evaluations focused on one or more of “accuracy; reflection; planning and monitoring; collaboration and competition; navigation; right of access and control as well as issues of improving trust; and assessment”. The methods used need to be varied: experiments with controlled conditions only go so far in revealing the benefits and drawbacks of open learner modelling. Many studies focus on motivational or attitudinal aspects of learners, hence most studies rely to some extent on self-report.

The evaluations of open learner models in use – either through small-scale studies or larger ones – are broadly favourable. Bull, Quigley and Mabbott (2006) provide an example of a field evaluation of the deployment of their OLMlets, which have been designed by instructors. The system was used in five university courses in Electronic, Electrical and Computer Engineering. Mitrovic and Martin (2007) provide evidence that a fairly simple open […]. As open learner models need to be deployed together with systems that are used widely with real students and in real institutional contexts, evaluations must necessarily go beyond small-scale controlled studies. Simpler open learner models have begun to appear in real-life settings. However, there are a number of research-based open learner models which have demonstrated promise in small-scale studies. Three such systems can be found in a recent special issue of the International Journal of Artificial Intelligence in Education: Tchetagni, Nkambou and Bourdeau (2007) outline a system that seeks to encourage reflection; Van Labeke, Brna and Morales (2007) provide a more detailed exposition of the xOLM system described in this chapter; and Zapata-Rivera et al. (2007) provide an approach to open learner modelling for a range of stakeholders which is strongly focused on issues connected with evidence-based argumentation.

The xOLM has now been subjected to a detailed evaluation, which indicated a relationship between confidence and growth in knowledge for learners who had used the xOLM within the LeActiveMath environment, suggesting that open learner modelling, in this case, facilitates the learner's meta-cognitive skills (reported by the evaluation team in Deliverable 44 of the LeActiveMath project). There is certainly room for further evaluation studies, but the evidence so far is encouraging.

FUTURE RESEARCH DIRECTIONS

We have argued in this chapter that open learner modelling can play a critical role in a new breed of intelligent learning environments driven by the aim of supporting the development of self-management, signification, participation and creativity in learners. We believe that its place in such environments is at the centre, as a main access door and meeting point. Open learner modelling needs to evolve in order to meet this challenge, and our work on the LeActiveMath project can be seen as an initial move in this direction. We have placed open learner modelling as an important tool in a web-based, content+metadata system, and have shown how it can take advantage of Semantic Web technologies and sophisticated knowledge representation techniques that are well suited to managing knowledge and uncertainty in e-learning environments.

In the previous section we outlined a set of outstanding issues that need to be addressed before we can accomplish our goal of pushing open learner modelling into e-learning. They are exclusive neither to our specific open learner modelling engine nor to the system hosting it. We have also sketched some moves towards making the Extended Learner Model a generic open learner modelling engine for e-learning systems based on standards and international reference models such as SCORM (ADL, 2004a). The road ahead looks bright and full of questions to answer.

REFERENCES

- ADL. (2001). Sharable Content Object Reference Model Version 1.2: The SCORM Overview. Retrieved Nov 25, 2007, from http://adlnet.gov/downloads/AuthNoReqd.aspx?FileName=SCORM_1_2_pdf.zip&ID=240.

- ADL. (2004a). Sharable Content Object Reference Model (SCORM). Retrieved Nov 25, 2007, from http://www.adlnet.gov/dowloads/AuthNotReqd.aspx?FileName=SCORM.2004.3ED.DocSuit.zip&ID=237

- ADL. (2004b). Sharable Content Object Reference Model (SCORM) Overview. Retrieved Nov 25, 2007, from http://www.adlnet.gov/donloads/AuthNotReqd.aspx?FileName=SCORM.2004.3ED.DocSuite.zip&ID=237

- ADL. (2004c). Sharable Content Object Reference Model (SCORM) Run Time Environment. Retrieved Nov 25, 2007, from http://www.adlnet.gov/dowloads/AuthNotReqd.aspx?FileName=SCORM.2004.3ED.DocSuite.zip&ID=237

- Ausubel, D. P. (1995). Educational Psychology: A Cognitive View. London: Routledge.

- Bates, T. (2005). Technology, e-learning and distance education. London: Routledge.

- Brooks, C., Greer, J., Melis, E., & Ullrich, C. (2006). Combining ITS and e-learning technologies: opportunities and challenges. In M. Ikeda, K. Ashley & T-W. Chan (Eds.), Intelligent Tutoring Systems, 8th International Conference, ITS 2006 (pp. 278-287). Berlin: Springer.

- Brooks, C., Winter, C. M., Greer, J., & McCalla, G. (2004). The massive user modelling system (MUMS). In J. C. Lester, R. M. Vicari & F. Paraguaçu (Eds.), Intelligent Tutoring Systems, 7th International Conference, ITS 2004 (pp. 635-645). Berlin: Springer.

- Brusilovsky, P. (2004). KnowledgeTree: A distributed architecture for adaptive e-learning. Paper presented at the 13th International World Wide Web Conference, Alternate Track Papers & Posters.

- Bull, S., & Kay, J. (2007). Student Models that Invite the Learner In: The SMILI Open Learner Modelling Framework. International Journal of Artificial Intelligence in Education, 17(3), 89-120.

- Bull, S., Brna, P., & Pain, H. (1995). Extending the Scope of the Student Model. User Modeling and User-Adapted Interaction, 5(1), 45-65.

- Bull, S., Quigley, S., & Mabbott, A. (2006). Computer-Based Formative Assessment to Promote Reflection and Learner Autonomy. Engineering Education: Journal of the Higher Education Academy Engineering Subject Centre, 1(1), 1-18.

- Buswell, S., Caprotti, O., Carlisle, D.P., Dewar, M. G., & Kohlhase, M. (2004). The OpenMath Standard (Standard): The OpenMath Society.

- Carbonell, J. R. (1970). AI in CAI: An artificial intelligence approach to computer assisted instruction. IEEE Transactions on Man Machine Systems, MMS-11(4), 190-202.

- Cook, R., & Kay, J. (1994). The Justified User Model: A Viewable, explained user model. In Proceedings of the Fourth International Conference on User Modeling (pp. 145-150). Hyannis, MA: The MITRE Corporation.

- Chan Núñez, M. E. (2004). Modelo mediacional para el diseño educativo en línea. Guadalajara, Jalisco: INNOVA, Universidad de Guadalajara.

- Devedzic, V. B. (2003). Key issues in next-generation Web-based education. IEEE Transactions on Systems, Man, and Cybernetics - Part C: Applications and Reviews, 33(3), 339-349.

- Dichev, C., & Dicheva, D. (2005). Contexts in Educational Topic Maps. In C.K. Looi, G. McCalla, B. Bredeweg & J. Breuker (Eds.), 12th International Conference on Artificial Intelligence in Education (pp. 789–791). Amsterdam: IOS Press.

- Flavell, J. H. (1979). Metacognition and Cognitive Monitoring: A New Area of Cognitive-Developmental Inquiry. American Psychologist, 34(10), 906-911.

- Freire, P. (1999). Pedagogía del Oprimido. Mexico City: Editorial Siglo XXI.

- Gibbons, A. S., & Fairweather, P. G. (2000). Computer-based instruction. In S. Tobias & J. D. Fletcher (Eds.), Training & Retraining: A Handbook for Business, Industry, Government, and the Military: MacMillan Reference Books.

- Gonczi, A., & Athanasou, J. (1996). Instrumentación de la educación basada en competencias. Perspectivas de la teoría y la práctica en Australia. In A. Argüelles (Ed.), Competencia laboral y educación basada en normas de competencia (pp. 265-288). México: Limusa y Noriega Editores.

- IEEE. (2002). 1484.12.1 Draft Standard for Learning Object Metadata.

- Kay, J., & Lum, A. (2004). Ontologies for scrutable student modelling in adaptive e-learning. In L. Aroyo & D. Dicheva (Eds.), Proceedings of the AH (Adaptive Hypermedia and Adaptive Web-Based Systems) 2004 Workshop on Applications of Semantic Web Technologies (pp. 292-301). Department of Mathematics and Computer Science, TU/e Technische Universiteit Eindhoven, 2004.

- Kobsa, A., & Pohl, W. (1995). The BGP-MS User Modeling System. User Modeling and User-Adapted Interaction, 4(2), 59-106.

- Kohlhase, M. (2005). OMDoc: An Open Markup Format for Mathematical Documents.

- Kohlhase, M. (2006). OMDoc – An Open Markup Format for Mathematical Documents (version 1.2): Springer Verlag.

- LeActiveMath Consortium. (2007). Language-Enhanced, User-Adaptive, Interactive eLearning for Mathematics. Retrieved June 22, 2007, from http://www.leactivemath.org

- Melis, E., Andrès, E., Büdenbender, J., Frischauf, A., Goguadze, G., Libbrecht, P., et al. (2001). ActiveMath: A Generic and Adaptive Web-Based Learning Environment. International Journal of Artificial Intelligence in Education, 12, 385-407.

- Mitrovic, A., & Martin, B. (2007). Evaluating the Effect of Open Student Models on Self-Assessment. International Journal of Artificial Intelligence in Education, 17(3), 121-144.

- Morales, R., Van Labeke, N., & Brna, P. (2006). Approximate Modelling of the Multi-dimensional Learner. In M. Ikeda, K. Ashley, & T-W. Chan (Eds.), Intelligent Tutoring Systems, 8th International Conference, ITS 2006 (pp. 555-564). LNCS 4053. Berlin: Springer-Verlag.

- Morales, R., Pain, H., Bull, S., & Kay, J. (1999). Open, interactive, and other overt approaches to learner modelling. AIED Resources, 10, 1070-1079.

- OECD. (2003). The PISA 2003 Assessment Framework: Organisation for Economic Co-Operation and Development. Paris, France: OECD Press.

- Paiva, A., & Self, J. A. (1995). TAGUS - A User and Learner Modeling Workbench. User Modeling and User-Adapted Interaction, 4(3), 197-226.

- Picard, R. W., Kort, B., & Reilly, R. (2007). Exploring the Role of Emotion in Propelling the SMET Learning Process. NSF Project Summary and Description Retrieved Nov 23, 2007, from http://affect.media.mit.edu/projectpages/lc/nsf1.html

- Polson, M. C., & Richardson, J. J. (Eds.). (1988). Foundations of Intelligent Tutoring Systems. New Jersey: Lawrence Erlbaum Associates.

- Reigeluth, C. M. (1999). Instructional-Design Theories and Models: A New Paradigm of Instructional Theory: Lawrence Erlbaum Associates.

- Self, J. (1988). Bypassing the intractable problem of student modelling. In C. Frasson (Ed.), Proceedings of the 1st International Conference on Intelligent Tutoring Systems ITS'88 (pp. 18-24). Montreal, Canada.

- Self, J. (1999). The Defining Characteristics of Intelligent Tutoring Systems Research: ITSs Care, Precisely. International Journal of Artificial Intelligence in Education, 10, 350-364.

- Shafer, G. (1976). A Mathematical Theory of Evidence: Princeton University Press.

- Tchetagni, J., Nkambou, R., & Bourdeau, J. (2007). Explicit Reflection in Prolog-Tutor. International Journal of Artificial Intelligence in Education, 17(3), 169-215.

- TopicMaps.org. (2001). XML Topic Maps (XTM) 1.0 (Specification): TopicMaps.org.

- Toulmin, S. (1959). The Uses of Argument. Cambridge University Press.

- UDG Virtual. (2004). El modelo educativo de UDGVirtual. Guadalajara, Jalisco: Universidad de Guadalajara.

- Van Labeke, N., Brna, P., & Morales, R. (2007). Opening up the Interpretation Process in an Open Learner Model. International Journal of Artificial Intelligence in Education, 17(3), 305-338.

- Vygotsky, L. (1996). Thought and Language. Cambridge, MA: MIT Press.

- Wenger, E. (1987). Artificial intelligence and tutoring systems. California: Morgan Kaufmann.

- Zapata-Rivera, J.-D., & Greer, J. (2004). Inspectable Bayesian Student Modelling Servers in Multiagent Tutoring Systems. International Journal of Human-Computer Studies, 61, 535-563.

- Zapata-Rivera, D., Hansen, E., Shute, V. J., Underwood, J. S., & Bauer, M. (2007). Evidence-based Approach to Interacting with Open Student Models. International Journal of Artificial Intelligence in Education, 17(3), 273-303.

ADDITIONAL READING

- Anderson, J. R., Boyle, C. F., Corbett, A. T., & Lewis, M. W. (1990). Cognitive Modeling and Intelligent Tutoring. Artificial Intelligence, 42, 7-49.

- Atif, Y., Benlamri, R., & Berri, J. (2003). Learning objects based framework for self-adaptive learning. Education and Information Technologies, 8(4), 345-368. Kluwer Academic Publishers.

- Bateman, S., Brooks, C., & McCalla, G. (2006). Collaborative tagging approaches for ontological metadata in adaptive e-learning systems. In D. Dicheva & L. Aroyo (Eds.), SW-EL’06: Fourth Workshop on Application of Semantic Web Technologies for Adaptive Educational Hypermedia, Dublin, Eire, June 2006.

- Brooks, C., & McCalla, G. (2006). Towards flexible learning object metadata. International Journal on Continuous Engineering Education and Lifelong Learning, 16(1/2), 50-63.

- Brooks, C., Kettel, L., & Hansen, C. (2005). Building a learning object content management system. In Proceedings of World Conference on E-Learning in Corporate, Government, Healthcare & Higher Education 2005 (pp. 2836-2843). Charlottesville, VA: Association for the Advancement of Computing in Education.

- Brooks, C., McCalla, G., & Winter, M. (2005). Flexible learning object metadata. In L. Aroyo & D. Dicheva (Eds.), Proceedings of Workshop SW-EL’05: Applications of Semantic Web Technologies for E-Learning (pp. 1-8). International Conference on Artificial Intelligence in Education 2005, Amsterdam, The Netherlands.

- Brusilovsky, P., & Nijhavan, H. (2002). A framework for adaptive e-learning based on distributed reusable learning activities. In Proceedings of World Conference on E-Learning in Corporate, Government, Healthcare & Higher Education 2002 (pp. 154-161). Charlottesville, VA: Association for the Advancement of Computing in Education.

- Conati, C., Gertner, A., & VanLehn, K. (2002). Using Bayesian Networks to Manage Uncertainty in Student Modeling. User Modeling and User-Adapted Interaction, 12(4), 371-417.

- Conlan, O., Hockemeyer, C., Lefrere, P., Wade, V., & Albert, D. (2001). Extending educational metadata schemas to describe adaptive learning resources. In Proceedings of the Twelfth ACM Conference on Hypertext and Hypermedia (pp. 161-162). Association for Computing Machinery.

- Denaux, R., Dimitrova, V., & Aroyo, L. (2004). Interactive ontology-based user modeling for personalized learning content management. In L. Aroyo & D. Dicheva (Eds.), Proceedings of the AH (Adaptive Hypermedia and Adaptive Web-Based Systems) 2004 Workshop on Applications of Semantic Web Technologies (pp. 338-347). Department of Mathematics and Computer Science, TU/e Technische Universiteit Eindhoven, 2004.

- Dicheva, D., Sosnovsky, S., Gavrilova, T., & Brusilovsky, P. (2005). Ontological web portal for Educational

- Heift, T., & Schulze, M. (2003). Student modeling and ab initio language learning. System, 31(4), 519-535.

- Kasai, T., Yamaguchi, H., Nagano, K., & Mizoguchi, R. (2005). A semantic web system for helping teachers plan lessons using ontology alignment. In L. Aroyo & D. Dicheva (Eds.), Proceedings of Workshop SW-EL’05: Applications of Semantic Web Technologies for E-Learning (pp. 9-17). International Conference on Artificial Intelligence in Education 2005, Amsterdam, The Netherlands.

- Kumar, A. (2005). Rule-based adaptive problem generation in programming tutors and its evaluation. In P. Brusilovsky, R. Conejo & E. Millán (Eds.), Proceedings of Workshop on Adaptive Systems for Web-Based Education: Tools and Reusability (pp. 35-43). International Conference on Artificial Intelligence in Education 2005, Amsterdam, The Netherlands.

- Lin, F., Holt, P., Leung, S., Hogeboom, M., & Cao, Y. (2004). A multi-agent and service-oriented architecture for developing integrated and intelligent Web-based education systems. In L. Aroyo & D. Dicheva (Eds.), Proceedings of the ITS 2004 Workshop on Applications of Semantic Web Technologies. Department of Mathematics and Computer Science, TU/e Technische Universiteit Eindhoven, 2004.

- Liu, J., & Greer, J. (2004). Individualized selection of learning objects. In L. Aroyo & D. Dicheva (Eds.), Proceedings of the ITS 2004 Workshop on Applications of Semantic Web Technologies. Department of Mathematics and Computer Science, TU/e Technische Universiteit Eindhoven, 2004.

- Moodie, P., & Kunz, P. (2003). Recipe for an intelligent learning management system (iLMS). In R. Calvo & M. Grandbastien (Eds.), Proceedings of Workshop Towards Intelligent Learning Management Systems (Supplemental Proceedings Volume 4). International Conference on Artificial Intelligence in Education 2003, Sydney, Australia.

- Muñoz, L. S., & Moreira de Oliveira, J. P. (2004). Applying Semantic Web technologies to achieve personalization and reuse of content in educational adaptive hypermedia systems. In L. Aroyo & D. Dicheva (Eds.), Proceedings of the AH (Adaptive Hypermedia and Adaptive Web-Based Systems) 2004 Workshop on Applications of Semantic Web Technologies (pp. 338-347). Department of Mathematics and Computer Science, TU/e Technische Universiteit Eindhoven, 2004.

- Nuzzo-Jones, G., Walonoski, J. A., Heffernan, N. T., & Livak, T. (2005). The eXtensible Tutor architecture: a new foundation for ITS. In P. Brusilovsky, R. Conejo & E. Millán (Eds.), Proceedings of Workshop on Adaptive Systems for Web-Based Education: Tools and Reusability (pp. 1-7). International Conference on Artificial Intelligence in Education 2005, Amsterdam, The Netherlands.

- Santos, O. C., Rodríguez, A., Gaudioso, E., & Boticario, J. (2003). Helping the tutor to manage a collaborative task in a web-based learning environment. In R. Calvo & M. Grandbastien (Eds.), Proceedings of Workshop Towards Intelligent Learning Management Systems (Supplemental Proceedings Volume 4). International Conference on Artificial Intelligence in Education 2003, Sydney, Australia.

- Schaverien, L. (2003). Re-conceiving “intelligence” in learning management systems: tuning learning to theory. In R. Calvo & M. Grandbastien (Eds.), Proceedings of Workshop Towards Intelligent Learning Management Systems (Supplemental Proceedings Volume 4). International Conference on Artificial Intelligence in Education 2003, Sydney, Australia.

- Specht, M., Kravcik, M., Klemke, R., Pesin, L., & Huttenhain, R. (2002). Adaptive learning environment for teaching and learning in WINDS. In P. De Bra, P. Brusilovsky & R. Conejo (Eds.), Adaptive Hypermedia and Adaptive Web-Based Systems, Second International Conference, AH 2002 (pp. 572-575). Lecture Notes in Computer Science 2347. Berlin: Springer.

- Suraweera, P., Mitrovic, A., & Martin, B. (2004). The use of ontologies in ITS domain knowledge authoring. In L. Aroyo & D. Dicheva (Eds.), Proceedings of the ITS 2004 Workshop on Applications of Semantic Web Technologies. Department of Mathematics and Computer Science, TU/e Technische Universiteit Eindhoven, 2004.

- Trella, M., Carmona, C., & Conejo, R. (2005). MEDEA: an open service-based learning platform for developing intelligent educational systems for the Web. In P. Brusilovsky, R. Conejo & E. Millán (Eds.), Proceedings of Workshop on Adaptive Systems for Web-Based Education: Tools and Reusability (pp. 27-34). International Conference on Artificial Intelligence in Education 2005, Amsterdam, The Netherlands.

- Winter, M., Brooks, C., & Greer, J. (2005). Towards best practices for Semantic Web student modelling. In C.K. Looi, G. McCalla, B. Bredeweg & J. Breuker (Eds.), Artificial Intelligence in Education - Supporting Learning through Intelligent and Socially Informed Technology (pp. 694-701). Amsterdam: IOS Press.

- Yacef, K. (2003). Some thoughts on the synergetic effects of combining ITS and LMS technologies for the service of Education. In R. Calvo & M. Grandbastien (Eds.), Proceedings of Workshop Towards Intelligent Learning Management Systems (Supplemental Proceedings Volume 4). International Conference on Artificial Intelligence in Education 2003, Sydney, Australia.